Human Factors & Accessibility: A Multi-Modal UX Research Study

The study is structured around three distinct but interconnected experimental missions:

Overview

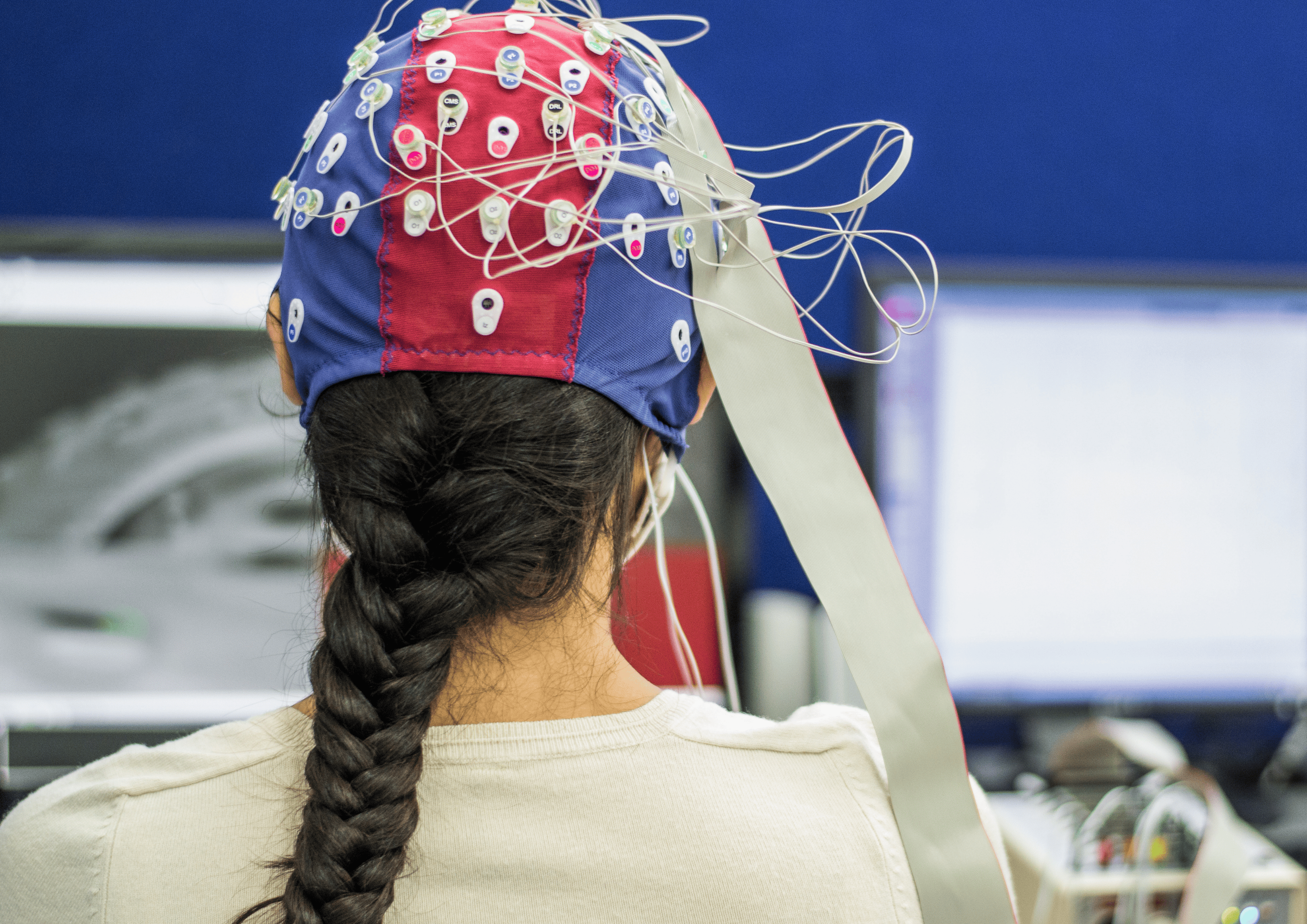

This project is a multi-modal UX research initiative that investigates how physiological, cognitive, and linguistic factors influence user experience across digital interfaces. This project integrates neuroscience tools and human-computer interaction (HCI) methods to uncover deeper insights into inclusive design.

Each study employs biosensors such as EEG (brain activity), EMG (muscle activity), ECG (heart rate), accelerometers (motion), and eye tracking to build a comprehensive picture of how real-world users engage with interfaces under different cognitive and physical conditions.

The goal of this project is to inform the design of more adaptive, inclusive, and cognitively sustainable digital experiences. By mapping physiological signals to interaction patterns, this research bridges gaps between usability, accessibility, and individual variability. The insights derived have direct applications in game design, e-learning platforms, e-commerce interfaces, and assistive technologies.

Each study employs biosensors such as EEG (brain activity), EMG (muscle activity), ECG (heart rate), accelerometers (motion), and eye tracking to build a comprehensive picture of how real-world users engage with interfaces under different cognitive and physical conditions.

The goal of this project is to inform the design of more adaptive, inclusive, and cognitively sustainable digital experiences. By mapping physiological signals to interaction patterns, this research bridges gaps between usability, accessibility, and individual variability. The insights derived have direct applications in game design, e-learning platforms, e-commerce interfaces, and assistive technologies.

Muscle engagement and physical strain

in mobile gaming interfaces, particularly for players with involuntary muscle tics.

Cognitive and emotional responses

of users with ADHD when interacting with dynamic content (e.g. social media) and focus environments (e.g. background music)

Visual attention and information processing

across native and non-native languages during reading and search tasks.

My contribution

As the lead UX researcher, I was responsible for driving every phase of the research process:

- Led the development of experimental frameworks, defined hypotheses, and ensured methodological coherence across all three studies.

- Assisted in setting up EEG, EMG, ECG, and eye-tracking equipment, and coordinated pilot tests to ensure data quality and participant safety.

- Conducted qualitative and quantitative data analysis using Python (MNE), Tobii Pro Lab, and EEG signal processing tools to extract meaningful behavioral and physiological patterns.

- Synthesized findings into actionable UX recommendations aimed at improving accessibility and engagement in digital environments.

Muscle Engagement and Tic Patterns in Racing Games

Objective

To examine the physical strain of prolonged mobile gameplay in users with motor tics and uncover how hand and forearm muscle activation correlates with control-intensive interface operation.

Method

We conducted a longitudinal experiment with a female participant diagnosed with congenital thumb tics.

EMG and accelerometer sensors were used to record muscle activity and movement direction during three gameplay sessions (5, 10, and 25 minutes) in the mobile racing game Monoposto Lite.

We focused on two key muscle groups: the flexor pollicis brevis (thumb) and flexor carpi radialis (forearm), measuring both voluntary input and involuntary tics.

Key findings

Increased Activation, Not Fatigue

The flexor pollicis brevis showed progressively higher activation over time without fatigue indicators—suggesting overuse rather than muscle exhaustion.
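
To illustrate the distinction between rising activation and fatigue, here is a minimal sketch rather than the study's actual pipeline. It assumes activation is tracked as windowed RMS amplitude. Fatigue in surface EMG is commonly associated with a downward shift in frequency content, so the sketch uses zero-crossing rate as a crude stand-in for a proper spectral measure such as median frequency. The function names, window length, and synthetic signal are all hypothetical.

```python
import math

def window_features(samples, fs, window_s=1.0):
    """Return (rms, zero_crossings_per_second) for each full window."""
    size = int(fs * window_s)
    features = []
    for start in range(0, len(samples) - size + 1, size):
        win = samples[start:start + size]
        mean = sum(win) / size
        centered = [x - mean for x in win]  # remove DC offset
        rms = math.sqrt(sum(x * x for x in centered) / size)
        crossings = sum(1 for a, b in zip(centered, centered[1:]) if a * b < 0)
        features.append((rms, crossings / window_s))
    return features

def slope(values):
    """Least-squares slope of values against their index."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den

# Synthetic stand-in for EMG (illustrative only, not study data):
# an 80 Hz tone whose amplitude grows while its frequency stays fixed.
fs = 1000
emg = [(1 + t / fs) * math.sin(2 * math.pi * 80 * t / fs) for t in range(5 * fs)]

feats = window_features(emg, fs)
rms_trend = slope([f[0] for f in feats])   # amplitude trend across windows
zcr_trend = slope([f[1] for f in feats])   # frequency-proxy trend across windows
```

Under these assumptions, a positive `rms_trend` combined with a flat `zcr_trend` would read as increasing activation without the frequency drop typically associated with fatigue.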

Tic Episodes Intensify Over Time

Involuntary thumb tics increased in frequency and intensity during longer gameplay sessions, visible as spikes in the EMG signal.

Thumb-Dominant Controls as a Risk Factor

Games relying heavily on repetitive thumb input may unintentionally cause overstimulation or reduced performance in users with motor sensitivities.

UX Implications

- Introduced a "Tic-Resilient Mode" that filters out involuntary movements via accelerometer data.

- Proposed tilt-based controls as an alternative input method to distribute motor strain.

- Suggested remappable and ergonomically spaced UI controls to minimize muscle overstimulation.
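
One way a "Tic-Resilient Mode" might filter input is sketched below. It assumes tics appear as abrupt jumps between consecutive accelerometer readings and that touch input can simply be ignored for a short hold period afterwards. The threshold, hold time, event format, and function names are hypothetical and would need per-user calibration; this is not an implemented feature of any game.

```python
import math

def flag_tics(accel, fs, jerk_threshold=30.0, hold_s=0.15):
    """Return a list of booleans: True where input should be ignored.

    accel: list of (x, y, z) accelerometer readings in m/s^2
    fs: sampling rate in Hz
    jerk_threshold: change in acceleration per second treated as a tic
                    (hypothetical value, would need calibration)
    hold_s: how long input stays suppressed after a detected jump
    """
    hold = int(hold_s * fs)
    ignore = [False] * len(accel)
    remaining = 0
    for i in range(1, len(accel)):
        delta = math.dist(accel[i], accel[i - 1])
        if delta * fs > jerk_threshold:
            remaining = hold  # (re)start the suppression window
        if remaining > 0:
            ignore[i] = True
            remaining -= 1
    return ignore

def filter_inputs(touch_events, ignore, fs):
    """Drop (timestamp_s, action) events that fall on ignored samples."""
    kept = []
    for t, action in touch_events:
        idx = min(int(t * fs), len(ignore) - 1)
        if not ignore[idx]:
            kept.append((t, action))
    return kept

# Illustrative data: a steady hand with one abrupt jolt at sample 50.
fs = 100
accel = [(0.0, 0.0, 9.8)] * 100
accel[50] = (5.0, 0.0, 9.8)
events = [(0.20, "steer_left"), (0.55, "brake"), (0.90, "steer_right")]

kept = filter_inputs(events, flag_tics(accel, fs), fs)
```

In this example the "brake" event lands inside the suppression window that follows the jolt and is dropped, while the two steering events pass through. A real implementation would also need to avoid suppressing deliberate fast movements, which is why tilt-based or remappable controls are proposed alongside it.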

Attention and Engagement in ADHD Users

Objective

To understand how neurodivergent users with ADHD process different digital stimuli (e.g., social media content and background sound) during focus-related tasks, and how physiological responses reflect cognitive engagement and fatigue.

Method

This study consisted of two missions involving a female participant with ADHD, equipped with EEG (brain activity), ECG (heart rate), and EMG (leg movement) sensors:

- Mission 1: The participant scrolled through Weibo, Instagram, and TikTok while data was collected on attention span, emotional regulation, and habitual fidgeting.

- Mission 2: The participant read the same passage under three different auditory environments: meditation music, summer pop music, and brown noise. EEG data was analyzed for engagement and cognitive load.

Key findings

Video Content Holds Attention but Drains It Fast

Dynamic short-form video formats (e.g., TikTok videos and Instagram Reels) captured attention effectively but resulted in time blindness and accelerated cognitive fatigue.

Brown Noise Promotes Relaxed, Sustained Focus

EEG data showed dominant Delta and Theta waves, indicating that brown noise helped the participant stay calm and maintain attention over time.

Summer Pop Music Increases Alertness but with Variability

While summer pop music boosted Beta activity (linked to cognitive engagement), it also led to inconsistent performance due to overstimulation.
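
Band comparisons like the ones above are typically based on relative band power. The sketch below shows the idea with a plain-Python DFT so it stays dependency-free; in practice a library such as MNE or SciPy would do this step. The band edges are one common convention and vary between labs. The signals are synthetic and the function names are hypothetical, so none of this reproduces the study's data or pipeline.

```python
import cmath
import math

# One common convention for band edges in Hz (assumed here).
BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}

def power_spectrum(samples, fs):
    """Naive DFT power spectrum as a list of (frequency_hz, power)."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]
    spectrum = []
    for k in range(1, n // 2):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        spectrum.append((k * fs / n, abs(coeff) ** 2))
    return spectrum

def relative_band_power(samples, fs):
    """Share of the 0.5-30 Hz power that falls in each band."""
    totals = {name: 0.0 for name in BANDS}
    for freq, power in power_spectrum(samples, fs):
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                totals[name] += power
    overall = sum(totals.values())
    return {name: value / overall for name, value in totals.items()}

# Synthetic 2-second signals (illustrative only, not recorded EEG).
fs = 128
n = 2 * fs

def tone(freq, t):
    return math.sin(2 * math.pi * freq * t / fs)

calm = [tone(6, t) + 0.2 * tone(20, t) for t in range(n)]    # mostly 6 Hz
alert = [0.2 * tone(6, t) + tone(20, t) for t in range(n)]   # mostly 20 Hz

calm_bands = relative_band_power(calm, fs)
alert_bands = relative_band_power(alert, fs)
```

Here `calm_bands` is dominated by theta and `alert_bands` by beta, which is the kind of contrast the brown noise and pop music conditions describe.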

Fidgeting as a Compensatory Mechanism

EMG data revealed that leg movement was actively used to self-regulate attention lapses, aligning with neurodivergent coping strategies.

UX Implications

- Proposed customizable auditory environments to support sustained focus through user-preferred stimuli.

- Recommended soft auditory reminders or haptic cues to help users manage time during immersive sessions.

- Suggested minimalist layouts, controlled content flow, and non-disruptive feedback loops to reduce cognitive load.

Cross-Linguistic Reading and Interface Preferences

Objective

To investigate how bilingual users interact with visual content and search for key information across interfaces designed in their native and secondary languages, using eye-tracking data to evaluate attention, comprehension, and search efficiency.

Method

This study consisted of two missions with a native Chinese speaker fluent in English:

- Mission 1: The participant searched for products across Amazon (English & Chinese) and Temu (English). Eye-tracking metrics (fixations, scan paths, heatmaps) were used to measure navigation behavior.

- Mission 2: The participant read two academic texts (one in English, one in Chinese) while eye-tracking software recorded reading flow, pause points, and re-reading behavior.
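
Fixation metrics like those in both missions start from grouping raw gaze samples into fixations. Eye-tracking software applies its own fixation filter, so the sketch below is only a simplified dispersion-threshold approach (often called I-DT) to show the principle. The thresholds, sample format, and function names are assumptions, and the gaze data is made up.

```python
def dispersion(points):
    """Spread of a set of gaze points: x-range plus y-range, in pixels."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))

def detect_fixations(gaze, fs, max_dispersion=30.0, min_duration_s=0.1):
    """Group gaze samples into fixations.

    gaze: list of (x, y) screen coordinates in pixels, sampled at fs Hz
    max_dispersion, min_duration_s: hypothetical thresholds
    """
    min_len = max(2, int(min_duration_s * fs))
    fixations = []
    start = 0
    while start + min_len <= len(gaze):
        end = start + min_len
        if dispersion(gaze[start:end]) <= max_dispersion:
            # Grow the window while the points stay close together.
            while end < len(gaze) and dispersion(gaze[start:end + 1]) <= max_dispersion:
                end += 1
            window = gaze[start:end]
            fixations.append({
                "start_s": start / fs,
                "duration_s": len(window) / fs,
                "x": sum(p[0] for p in window) / len(window),
                "y": sum(p[1] for p in window) / len(window),
            })
            start = end
        else:
            start += 1  # still in a saccade, slide forward one sample
    return fixations

# Made-up gaze trace: dwell near (100, 100), jump, dwell near (400, 120).
fs = 60
gaze = (
    [(100 + i % 3, 100) for i in range(12)]
    + [(200, 105), (300, 110), (350, 115)]
    + [(400, 120 + i % 2) for i in range(18)]
)
fixations = detect_fixations(gaze, fs)
```

The resulting list of fixations is the raw material for scan paths (fixations in order), heatmaps (fixation positions weighted by duration), and pause points (unusually long fixations).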

Key findings

Language Familiarity Boosts Task Performance

Task success rate was higher on the Chinese-language version of Amazon than on the English version, highlighting the impact of language comfort.

Different Reading Strategies in Each Language

In English, the participant read word by word and re-read frequently (especially at sentence boundaries). In Chinese, scanning was broader and faster, with efficient keyword recognition.
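
The contrast between the two reading strategies could be quantified as follows. This sketch assumes each fixation has already been mapped to the index of the word it landed on, and it reports the share of backward jumps (regressions) and the average forward step. The sequences are invented for illustration and are not the participant's data; the function name is hypothetical.

```python
def reading_metrics(word_indices):
    """Summarize a reading pattern from the word index of each fixation."""
    steps = [b - a for a, b in zip(word_indices, word_indices[1:])]
    regressions = [s for s in steps if s < 0]   # jumps back to earlier words
    forward = [s for s in steps if s > 0]       # jumps ahead in the text
    return {
        "fixations": len(word_indices),
        "regression_rate": len(regressions) / len(steps) if steps else 0.0,
        "mean_forward_step": sum(forward) / len(forward) if forward else 0.0,
    }

# Invented examples: word-by-word reading with re-reading vs. broad scanning.
careful = reading_metrics([0, 1, 2, 3, 2, 3, 4, 5, 4, 5, 6])
scanning = reading_metrics([0, 2, 5, 7, 9])
```

A higher `regression_rate` with a forward step close to one word matches the word-by-word pattern, while few regressions and larger forward steps match broad scanning.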

Visual Content Supports Comprehension

Charts, diagrams, and contextual product images played a key role in aiding understanding—particularly in complex or second-language contexts.

UX Implications

- Proposed adaptive layout switching (e.g., image-rich vs. text-light modes) based on user language or cultural preferences.

- Advocated for decoupled language/region settings to support flexible browsing experiences.

- Recommended real-time keyword highlighting, hover-triggered translations, and visual annotations to support non-native readers.